Wataru Ikeda

1st Year Master's Student

Tohoku University

I'm a first-year master's student at Tohoku University. My research interests include natural language processing and knowledge in language models.

News

Nov 26, 2025

Prize Winner of NeurIPS 2025 Workshop

Our team has been selected as a prize winner in the MMU-RAG NeurIPS 2025 Competition.

Jul 7, 2025

Paper accepted at COLM 2025

Our paper, "Layerwise Importance Analysis of Feed-Forward Networks in Transformer-based Language Models," has been accepted at COLM 2025.

Mar 13, 2025

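Young Researcher Encouragement Award at the 31st Annual Meeting of the Association for Natural Language Processing

I received the Young Researcher Encouragement Award at the 31st Annual Meeting of the Association for Natural Language Processing for the paper "Layerwise Importance Verification of FFN Layers in Transformer LLMs" (original title: "Transformer LLMにおける層単位のFFN層の重要度検証").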

Education

Publications

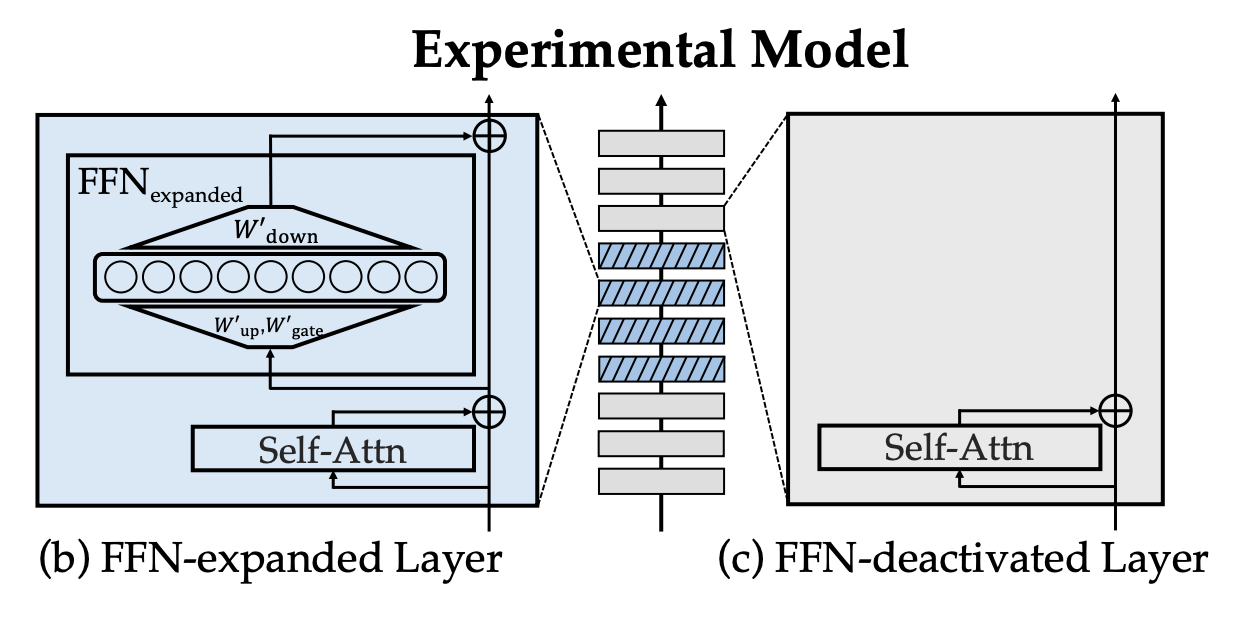

Conference on Language Modeling (COLM) 2025

Layerwise Importance Analysis of Feed-Forward Networks in Transformer-based Language Models

Wataru Ikeda, Kazuki Yano, Ryosuke Takahashi, Jaesung Lee, Keigo Shibata, Jun Suzuki

Layerwise importance analysis of FFNs through selective FFN deactivation during pretraining.

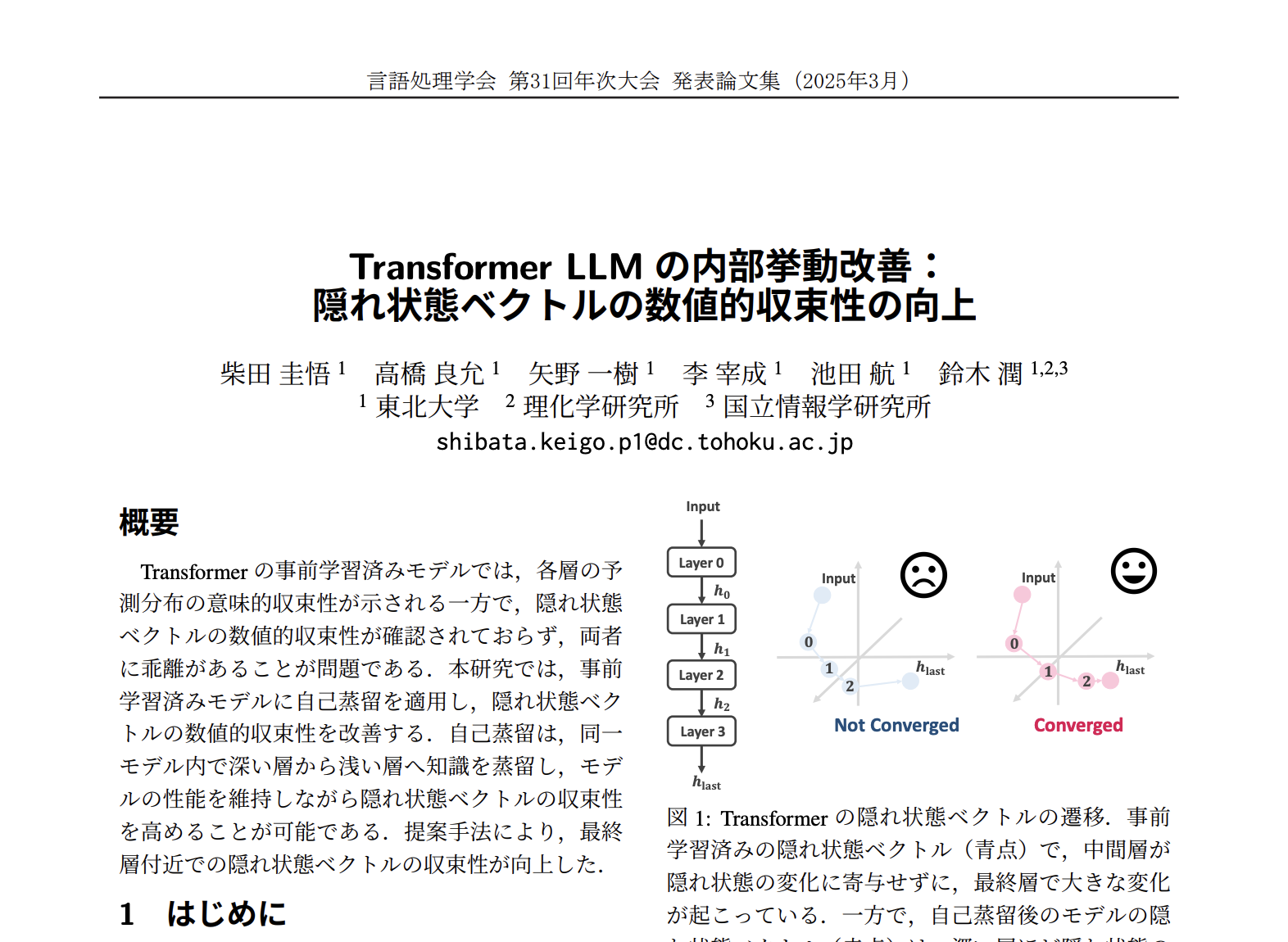

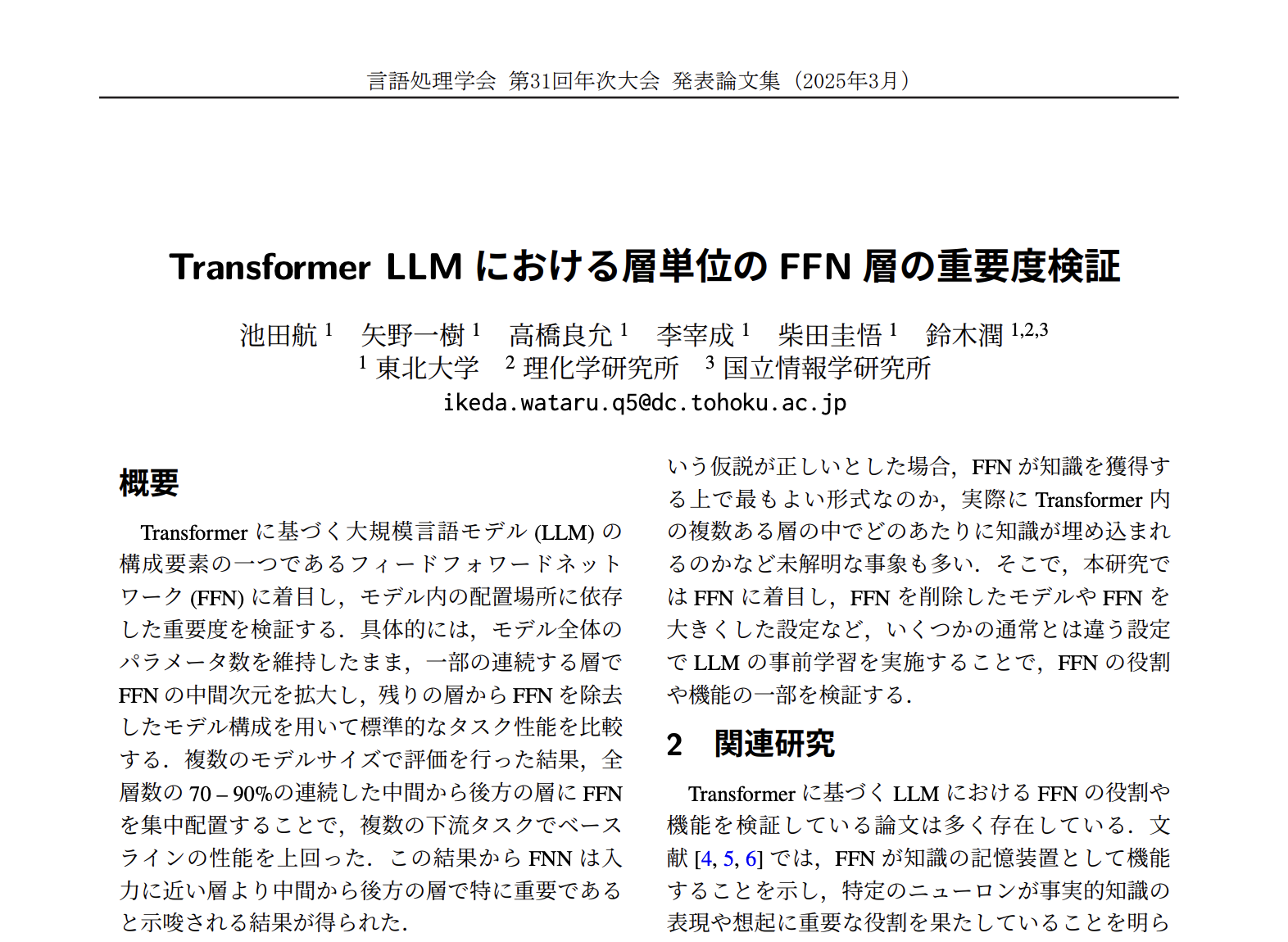

The 31st Annual Meeting of the Association for Natural Language Processing, 2025

🏆 Young Researcher Encouragement Award [20/487]

Layerwise Importance Verification of FFN Layers in Transformer LLMs (original title: Transformer LLMにおける層単位のFFN層の重要度検証)

Wataru Ikeda, Kazuki Yano, Ryosuke Takahashi, Jaesung Lee, Keigo Shibata, Jun Suzuki